Understanding Motion Control in Video Diffusion Models

Motion control represents a fundamental challenge in video generation with Stable Diffusion-style architectures. Unlike static image generation, video synthesis requires temporal coherence across frames while maintaining precise control over object movements, camera trajectories, and scene dynamics. The difficulty grows quickly once multi-object interactions, occlusions, and realistic physics enter the picture.

Traditional video generation models often produce visually appealing results but lack fine-grained control over specific motion patterns. This limitation has driven researchers to develop specialized conditioning mechanisms that allow users to specify desired movements explicitly. These methods bridge the gap between high-level creative intent and low-level pixel-space transformations, enabling applications ranging from educational content creation to scientific visualization.

The three primary approaches—optical flow conditioning, pose-based guidance, and trajectory-driven generation—each address motion control from different perspectives. Understanding their underlying principles, computational requirements, and practical trade-offs is essential for researchers and developers working with modern video generation systems.

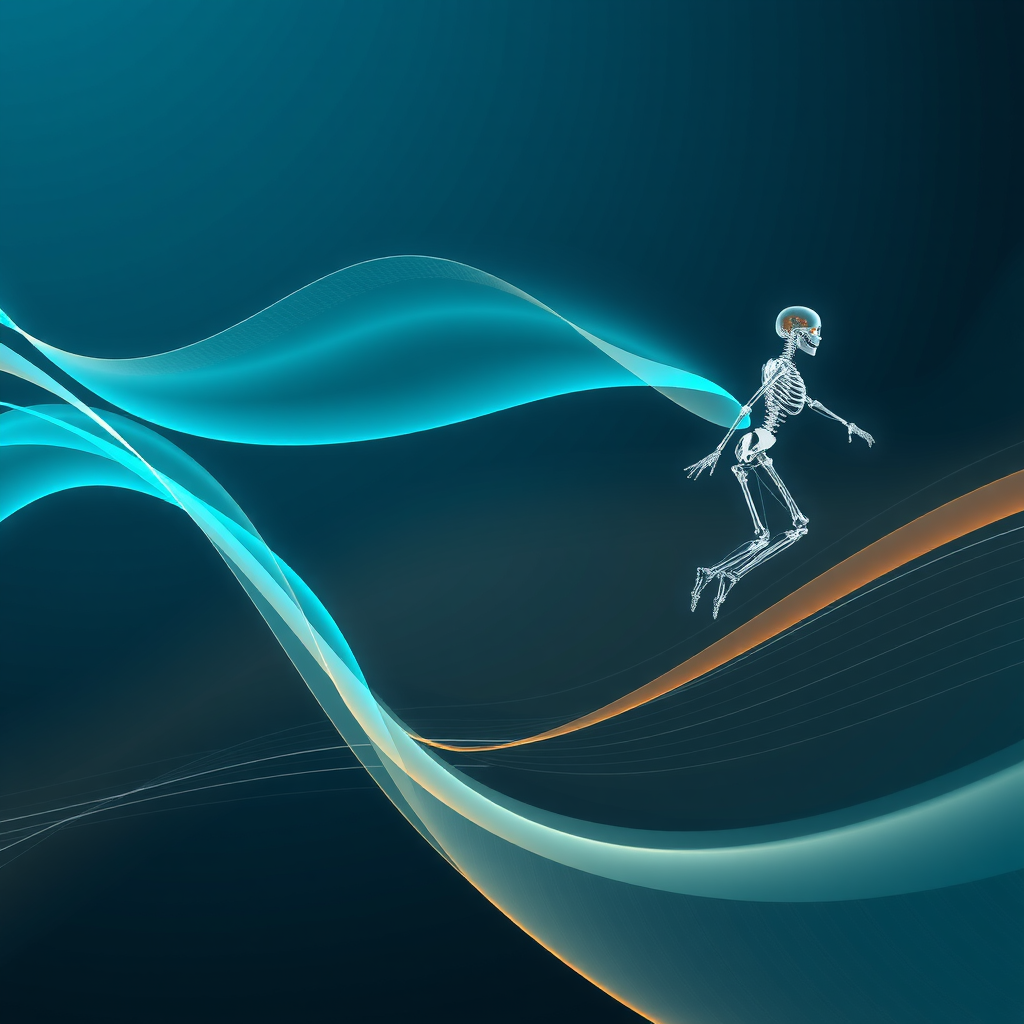

Optical Flow Conditioning: Dense Motion Field Control

Fundamental Principles

Optical flow conditioning leverages dense motion fields to guide video generation at the pixel level. This approach computes motion vectors for every pixel between consecutive frames, creating a comprehensive representation of scene dynamics. By conditioning the diffusion process on these flow fields, models can generate videos that closely follow specified motion patterns.

The mathematical foundation relies on the brightness constancy assumption, where pixel intensities remain constant along motion trajectories. Modern implementations extend this concept using learned flow estimators that can handle complex scenarios including occlusions, lighting changes, and non-rigid deformations. These neural flow estimators are typically trained on large-scale video datasets to capture realistic motion statistics.
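Written out, brightness constancy says that a pixel moving by the displacement (u, v) between frames t and t+1 keeps its intensity I. A first-order Taylor expansion of the left-hand side turns this into the classic linear optical flow constraint:

```latex
I(x + u,\, y + v,\, t + 1) = I(x, y, t)
\quad\Longrightarrow\quad
\frac{\partial I}{\partial x}\,u + \frac{\partial I}{\partial y}\,v + \frac{\partial I}{\partial t} \approx 0
```

This gives one equation in two unknowns per pixel (the aperture problem), which is why classical estimators add smoothness assumptions and learned estimators rely on priors acquired from training data.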

Implementation Architecture

Optical flow conditioning integrates into video diffusion models through specialized attention mechanisms. The flow fields are encoded into latent representations that modulate the denoising process at each timestep. This allows the model to maintain temporal consistency while respecting the specified motion constraints. Advanced implementations use multi-scale flow pyramids to capture both fine-grained details and large-scale movements.
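The text does not say how the pyramid levels are built, so the sketch below assumes the simplest option: repeated 2×2 average pooling of a dense flow field. The one subtle step is rescaling. A displacement of d pixels at full resolution spans only d/2 pixels on a grid half as wide, so vectors are halved at each level. In an actual model, each level would then pass through learned encoder layers before modulating the denoiser; that part is omitted here.

```python
import numpy as np

def flow_pyramid(flow: np.ndarray, levels: int = 3) -> list:
    """Build a coarse-to-fine pyramid from a dense flow field.

    flow: (H, W, 2) array of per-pixel (dx, dy) displacements in pixels.
    Each level halves the resolution with 2x2 average pooling and halves
    the vectors so they stay in the units of the smaller grid.
    """
    pyramid = [flow]
    current = flow
    for _ in range(levels - 1):
        h2, w2 = current.shape[0] // 2, current.shape[1] // 2
        # Crop to an even size, then average each non-overlapping 2x2 block.
        cropped = current[: h2 * 2, : w2 * 2]
        pooled = cropped.reshape(h2, 2, w2, 2, 2).mean(axis=(1, 3))
        current = pooled * 0.5  # rescale displacement magnitudes
        pyramid.append(current)
    return pyramid

# Usage: a uniform motion of (4, -2) pixels on a 64x64 grid.
uniform = np.tile(np.array([4.0, -2.0]), (64, 64, 1))
levels = flow_pyramid(uniform, levels=3)
print([lvl.shape for lvl in levels])  # [(64, 64, 2), (32, 32, 2), (16, 16, 2)]
print(levels[2][0, 0].tolist())       # [1.0, -0.5]
```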

The conditioning process typically involves warping intermediate feature maps according to the flow field, ensuring that generated content follows the prescribed motion paths. This warping operation is differentiable, enabling end-to-end training of the entire system. Researchers have found that combining flow conditioning with additional temporal attention layers significantly improves motion coherence across longer sequences.
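A minimal NumPy sketch of the warping step, assuming backward warping (each target pixel samples the source feature map at its own position plus the flow) with bilinear interpolation and clamping at the border. Because the output is piecewise linear in both the features and the flow, gradients can pass through it. Deep-learning frameworks provide this as a built-in op (for example, PyTorch's `torch.nn.functional.grid_sample`), so a hand-written version like this one is only for illustration.

```python
import numpy as np

def backward_warp(features: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Warp a feature map with a dense flow field using bilinear sampling.

    features: (H, W, C) feature map from the source frame.
    flow:     (H, W, 2) displacement (dx, dy) telling each target pixel
              where to sample from in the source frame.
    """
    h, w, _ = features.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Sampling positions in the source frame, clamped to the image border.
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    # Fractional offsets become the bilinear interpolation weights.
    wx = (sx - x0)[..., None]
    wy = (sy - y0)[..., None]
    top = (1 - wx) * features[y0, x0] + wx * features[y0, x1]
    bottom = (1 - wx) * features[y1, x0] + wx * features[y1, x1]
    return (1 - wy) * top + wy * bottom

# Usage: shifting a horizontal ramp by half a pixel.
h, w = 4, 5
ramp = np.tile(np.arange(w, dtype=float), (h, 1))[..., None]  # (4, 5, 1)
flow = np.zeros((h, w, 2))
flow[..., 0] = 0.5
warped = backward_warp(ramp, flow)
print(warped[0, :, 0].tolist())  # [0.5, 1.5, 2.5, 3.5, 4.0]
```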

Key Advantages of Optical Flow Conditioning

Dense Control: Provides pixel-level precision for complex motion patterns, enabling detailed control over scene dynamics.

Physical Realism: Naturally captures realistic motion characteristics such as motion blur, occlusions, and perspective effects.

Flexibility: Can represent arbitrary motion patterns without requiring semantic understanding of scene content.

Temporal Coherence: Maintains strong frame-to-frame consistency through explicit motion field guidance.

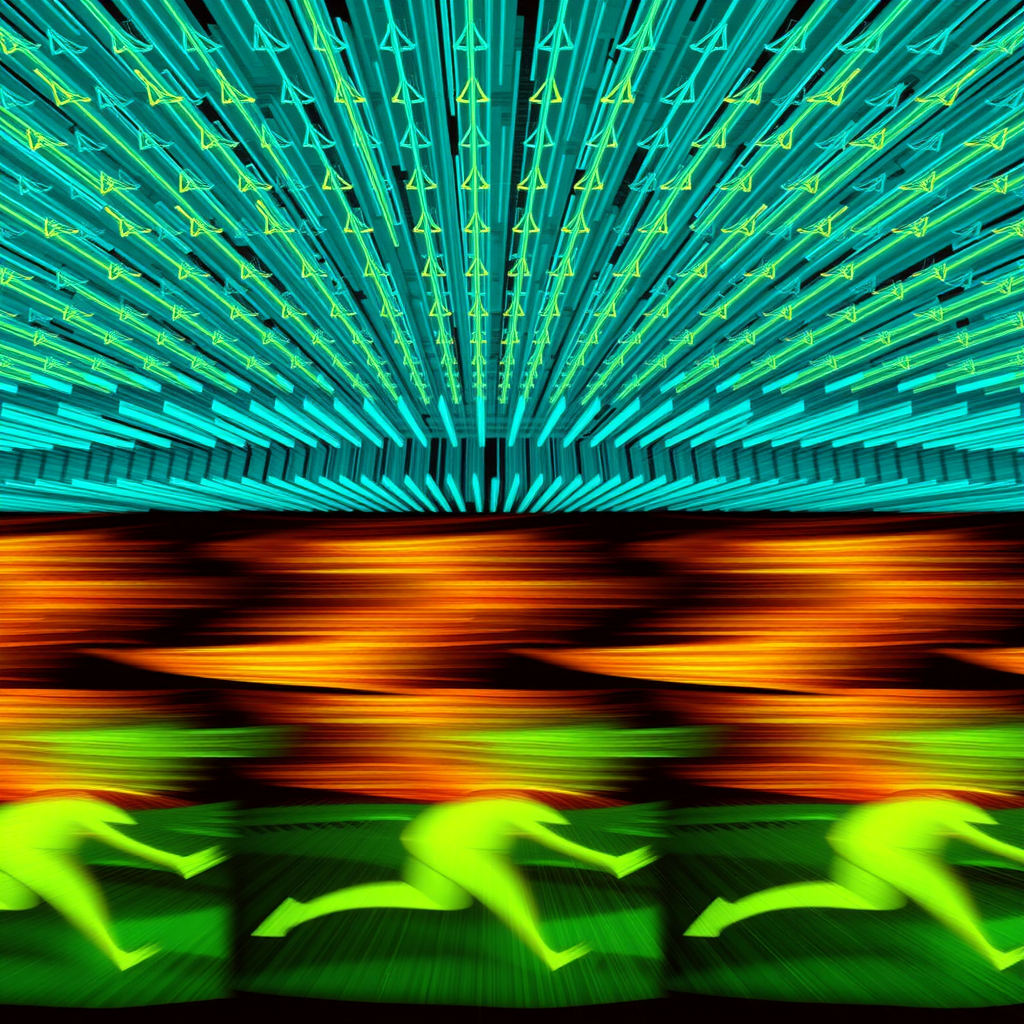

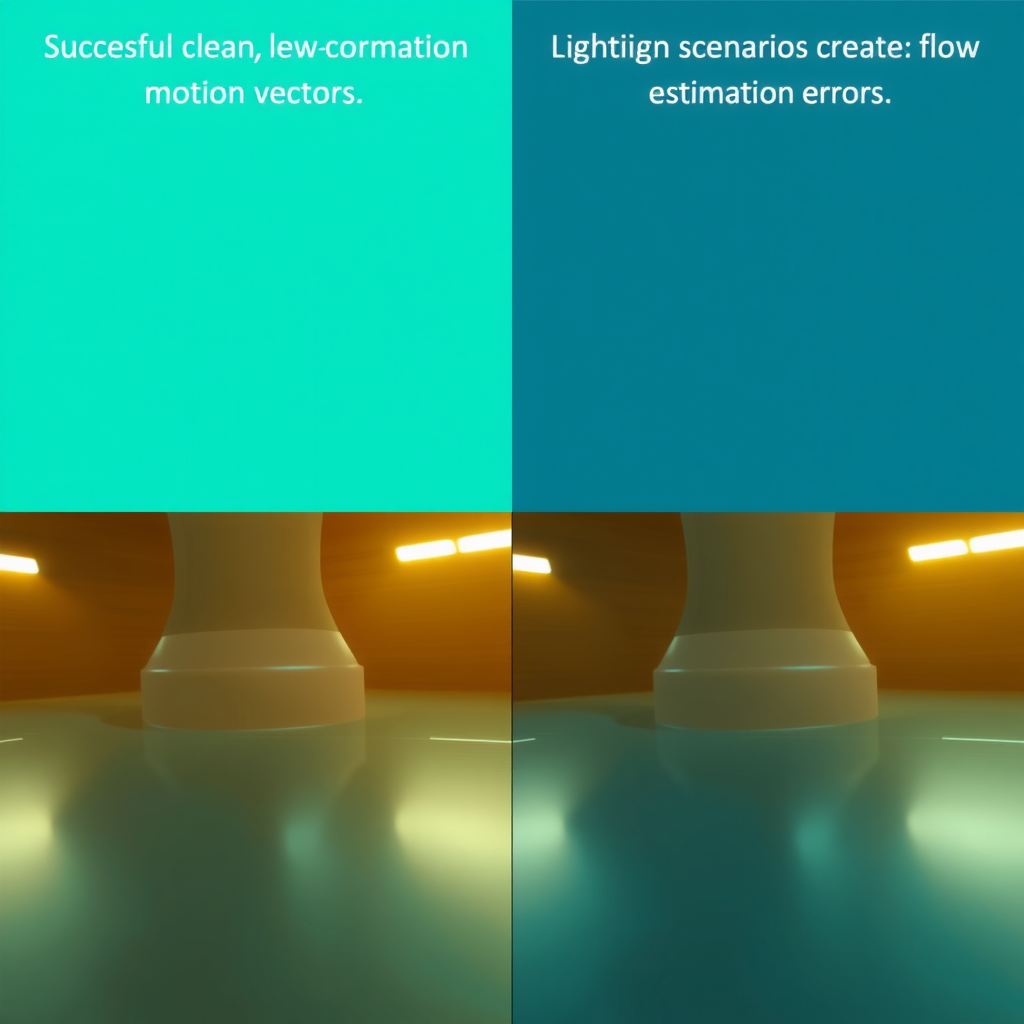

Limitations and Challenges

Despite its strengths, optical flow conditioning faces several practical challenges. Computing accurate flow fields requires significant computational resources, particularly for high-resolution videos. The quality of generated videos heavily depends on flow estimation accuracy, and errors in flow computation can propagate through the generation process, leading to artifacts.

Another limitation is the difficulty of manually specifying flow fields for creative applications. While flow can be extracted from reference videos, creating custom motion patterns from scratch requires specialized tools and expertise. This makes optical flow conditioning better suited to motion transfer and style-based applications than to fully creative video synthesis.

Pose-Based Guidance: Semantic Motion Control

Conceptual Framework

Pose-based guidance takes a fundamentally different approach by controlling motion through high-level semantic representations. Instead of specifying pixel-level movements, this method uses skeletal pose sequences to guide character animations and human movements. This abstraction makes pose-based control more intuitive for users while maintaining precise control over body positions and movements.

The approach builds on advances in human pose estimation and skeletal animation. By representing human figures as connected joint hierarchies, pose-based methods can specify complex movements using relatively compact representations. This semantic understanding enables the model to generate realistic human motions while respecting anatomical constraints and natural movement patterns.
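To make "connected joint hierarchies" concrete, here is a sketch of the common parent-array layout plus 2D forward kinematics. The five-joint skeleton, its bone lengths, and the `PARENTS`/`BONE_LENGTHS` names are hypothetical; real skeletons have more joints and usually work in 3D, but the idea carries over. A pose is one rotation angle per joint, so a whole sequence fits in a small (T, J) array, and fixed bone lengths guarantee that every pose keeps the limb proportions.

```python
import numpy as np

# Hypothetical 5-joint planar skeleton: each entry is the parent joint index
# (-1 marks the root). Two branches: root -> 1 -> 2 and root -> 3 -> 4.
PARENTS = [-1, 0, 1, 0, 3]
BONE_LENGTHS = [0.0, 1.0, 0.8, 1.0, 0.8]

def forward_kinematics(local_angles, root_xy=(0.0, 0.0)):
    """Convert per-joint local rotation angles (radians) into 2D positions.

    Parents appear before their children in PARENTS, so one pass can
    accumulate each joint's global angle from its parent's.
    """
    n = len(PARENTS)
    global_angle = np.zeros(n)
    positions = np.zeros((n, 2))
    for j, parent in enumerate(PARENTS):
        if parent < 0:
            global_angle[j] = local_angles[j]
            positions[j] = root_xy
            continue
        global_angle[j] = global_angle[parent] + local_angles[j]
        direction = np.array([np.cos(global_angle[j]), np.sin(global_angle[j])])
        positions[j] = positions[parent] + BONE_LENGTHS[j] * direction
    return positions

# A pose sequence is a (T, J) array of angles: 24 frames x 5 joints here.
T = 24
angles_seq = np.zeros((T, len(PARENTS)))
angles_seq[:, 2] = np.linspace(0.0, np.pi / 2, T)  # bend joint 2 over time
positions_seq = np.stack([forward_kinematics(a) for a in angles_seq])
print(positions_seq.shape)  # (24, 5, 2)
```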

Technical Implementation

Modern pose-based guidance systems integrate pose information through multiple conditioning pathways. The skeletal pose sequence is typically encoded using graph neural networks that capture the hierarchical structure of human anatomy. These pose embeddings are then injected into the video diffusion model through cross-attention mechanisms, allowing the model to align generated content with specified poses.
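The sketch below illustrates the two pathways named above under heavily simplified assumptions. A single graph-convolution layer (the symmetric-normalized form D^-1/2 (A + I) D^-1/2 X W) encodes joint coordinates using the skeleton's adjacency, and single-head cross-attention lets video latent tokens (queries) read from the pose embeddings (keys and values). Random matrices stand in for learned weights; the sizes and the residual injection are illustrative choices, not any specific published architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def normalized_adjacency(parents):
    """Symmetric normalized adjacency D^-1/2 (A + I) D^-1/2 of a skeleton."""
    n = len(parents)
    a = np.eye(n)  # self-loops
    for child, parent in enumerate(parents):
        if parent >= 0:
            a[child, parent] = a[parent, child] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(x, a_hat, weight):
    """One graph-convolution layer: aggregate neighbors, project, ReLU."""
    return np.maximum(a_hat @ x @ weight, 0.0)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(video_tokens, pose_tokens, wq, wk, wv):
    """Video latent tokens (queries) attend to pose embeddings (keys/values)."""
    q, k, v = video_tokens @ wq, pose_tokens @ wk, pose_tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

# Toy sizes: one frame with 5 joints in 2D, 16 latent tokens of width 32.
parents = [-1, 0, 1, 0, 3]
joints_xy = rng.normal(size=(5, 2))
a_hat = normalized_adjacency(parents)
pose_emb = gcn_layer(joints_xy, a_hat, rng.normal(size=(2, 32)))
latents = rng.normal(size=(16, 32))
wq, wk, wv = (rng.normal(size=(32, 32)) * 0.1 for _ in range(3))
conditioned = latents + cross_attention(latents, pose_emb, wq, wk, wv)  # residual add
print(conditioned.shape)  # (16, 32)
```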

Advanced implementations incorporate temporal pose smoothing to ensure natural motion transitions. This involves applying temporal convolutions to pose sequences before conditioning, helping the model generate fluid movements rather than discrete pose-to-pose transitions. Some systems also include physics-based constraints to enforce realistic joint angles and movement velocities.
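As an illustration of temporal pose smoothing, the sketch below filters a (T, J, D) pose sequence along time with a fixed Gaussian kernel, a hand-designed stand-in for the learned temporal convolutions described above. It also adds a per-joint angle clamp as a crude version of a joint-limit constraint. The kernel width and the limits are assumed values.

```python
import numpy as np

def gaussian_kernel(sigma: float, radius: int) -> np.ndarray:
    t = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (t / sigma) ** 2)
    return k / k.sum()  # normalize so constant signals are preserved

def smooth_pose_sequence(poses: np.ndarray, sigma: float = 1.5) -> np.ndarray:
    """Temporally smooth a (T, J, D) pose sequence with a 1D Gaussian convolution.

    Each joint coordinate is filtered independently along the time axis.
    Edge padding keeps the output length equal to T and avoids pulling the
    first and last frames toward zero.
    """
    radius = max(1, int(3 * sigma))
    kernel = gaussian_kernel(sigma, radius)
    padded = np.pad(poses, ((radius, radius), (0, 0), (0, 0)), mode="edge")
    out = np.zeros_like(poses, dtype=float)
    for i, weight in enumerate(kernel):
        out += weight * padded[i : i + poses.shape[0]]
    return out

def clamp_joint_angles(angles, lower, upper):
    """Enforce per-joint angle limits (radians), e.g. after smoothing."""
    return np.clip(angles, lower, upper)

rng = np.random.default_rng(0)
jittery = rng.normal(size=(48, 17, 2))  # 48 frames, 17 joints, (x, y)
smoothed = smooth_pose_sequence(jittery, sigma=1.5)
print(smoothed.shape)  # (48, 17, 2)
```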

Optical Flow

- Dense pixel-level control

- High computational cost

- Excellent for motion transfer

- Requires flow estimation

- Best for complex scenes

Pose-Based

- Semantic human control

- Intuitive specification

- Limited to articulated objects

- Natural motion patterns

- Efficient computation

Trajectory-Driven

- Sparse control points

- User-friendly interface

- Flexible object control

- Interpolation required

- Scalable to long videos

Applications and Use Cases

Pose-based guidance excels in applications involving human subjects, including educational content creation, fitness instruction videos, and character animation. The intuitive nature of pose specification makes it accessible to non-technical users who can define movements using motion capture data or interactive pose editors. This democratization of video generation technology has significant implications for content creators and educators.

In scientific contexts, pose-based methods can support research on human movement patterns and biomechanics. By generating synthetic videos with controlled pose variations, researchers could analyze movement efficiency, injury risk factors, and rehabilitation protocols. The ability to systematically vary pose parameters while holding other factors constant provides valuable experimental control.

Trajectory-Driven Generation: Sparse Control Paradigm

Core Methodology

Trajectory-driven generation represents a middle ground between dense optical flow and semantic pose control. This approach allows users to specify motion through sparse control points or trajectories, which the model interpolates to generate complete motion sequences. The key innovation lies in learning to infer realistic motion patterns from minimal user input.

The method typically involves marking key points on objects or regions of interest and defining their paths through time. The video generation model then synthesizes content that moves these points along specified trajectories while maintaining visual coherence and realistic dynamics. This sparse control paradigm significantly reduces the effort required to specify complex motions compared to dense flow fields.
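A minimal sketch of the input side of this workflow, under two assumptions not stated in the text: keyframed control points are densified with plain linear interpolation (the learned interpolation discussed below would replace this), and the resulting path is encoded as a sparse flow map plus a validity mask, one common way to hand a trajectory to a flow-aware model. The function names and canvas size are hypothetical.

```python
import numpy as np

def densify_trajectory(keyframes, num_frames):
    """Linearly interpolate sparse (frame, x, y) control points to every frame."""
    kf = np.asarray(keyframes, dtype=float)
    frames = np.arange(num_frames)
    xs = np.interp(frames, kf[:, 0], kf[:, 1])
    ys = np.interp(frames, kf[:, 0], kf[:, 2])
    return np.stack([xs, ys], axis=1)  # (num_frames, 2)

def rasterize_sparse_flow(points, height, width):
    """Encode a per-frame point path as sparse flow maps plus a validity mask.

    For each consecutive frame pair, the tracked point's displacement is
    written at its (rounded) location; all other pixels stay zero, and the
    mask marks which pixels carry a constraint.
    """
    t = len(points) - 1
    flow = np.zeros((t, height, width, 2))
    mask = np.zeros((t, height, width), dtype=bool)
    for i in range(t):
        x, y = np.round(points[i]).astype(int)
        if 0 <= x < width and 0 <= y < height:
            flow[i, y, x] = points[i + 1] - points[i]
            mask[i, y, x] = True
    return flow, mask

# Three user-drawn control points over a 9-frame clip on a 32x32 canvas.
path = densify_trajectory([(0, 4, 4), (4, 12, 4), (8, 12, 20)], num_frames=9)
flow, mask = rasterize_sparse_flow(path, height=32, width=32)
print(path[2].tolist())  # [8.0, 4.0]
print(int(mask.sum()))   # 8
```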

Neural Interpolation Mechanisms

The success of trajectory-driven methods depends critically on sophisticated interpolation mechanisms. Modern implementations use learned motion priors that capture natural movement patterns from training data. These priors enable the model to generate plausible intermediate motions between sparse control points, filling in details that users don't explicitly specify.

Advanced systems incorporate physics-aware interpolation that respects momentum, gravity, and collision constraints. This physical grounding helps generate more realistic motions, particularly for rigid objects and predictable movements. The interpolation networks are typically trained using self-supervised objectives that encourage smooth, physically plausible trajectories.
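The interpolation networks themselves are learned, but the physical idea can be shown with a hand-built model. This sketch swaps in an analytic constant-gravity (ballistic) fit: given sparse control points, it solves for the initial position and velocity by least squares, then evaluates the path at every frame. It respects momentum and gravity in the sense of a projectile model but ignores collisions, and the gravity value and units are assumptions (y grows downward, as in image coordinates).

```python
import numpy as np

def fit_ballistic(times, points, gravity=(0.0, 9.8)):
    """Fit p(t) = p0 + v0 * t + 0.5 * g * t**2 to sparse control points.

    Gravity g is fixed, so only the initial position p0 and velocity v0 are
    unknown; both axes are solved at once by linear least squares. With more
    than two control points the fit averages out noise in the user's input.
    """
    t = np.asarray(times, dtype=float)
    p = np.asarray(points, dtype=float)            # (K, 2)
    g = np.asarray(gravity, dtype=float)
    residual = p - 0.5 * g * t[:, None] ** 2       # remove the known gravity term
    design = np.stack([np.ones_like(t), t], axis=1)  # columns: 1, t
    coeffs, *_ = np.linalg.lstsq(design, residual, rcond=None)
    p0, v0 = coeffs                                 # rows: intercept, slope
    return p0, v0, g

def evaluate(p0, v0, g, t):
    t = np.asarray(t, dtype=float)[:, None]
    return p0 + v0 * t + 0.5 * g * t ** 2

# Hypothetical control points taken from a known ballistic path.
true_p0, true_v0 = np.array([0.0, 10.0]), np.array([3.0, -12.0])
key_t = np.array([0.0, 0.5, 1.5, 2.0])
keys = evaluate(true_p0, true_v0, np.array([0.0, 9.8]), key_t)
p0, v0, g_fit = fit_ballistic(key_t, keys)
dense = evaluate(p0, v0, g_fit, np.linspace(0.0, 2.0, 25))  # one point per frame
print(np.allclose(p0, true_p0), np.allclose(v0, true_v0))  # True True
```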

User Interface Considerations

Trajectory-driven generation offers significant advantages in terms of user experience. Interactive tools allow creators to draw motion paths directly on video frames, providing immediate visual feedback. This direct manipulation interface makes motion specification intuitive even for users without technical expertise in computer vision or animation.

The sparse nature of trajectory control also enables efficient editing and refinement. Users can adjust individual control points without respecifying entire motion sequences, facilitating iterative creative workflows. This flexibility has made trajectory-driven methods popular in educational video production and scientific visualization applications.

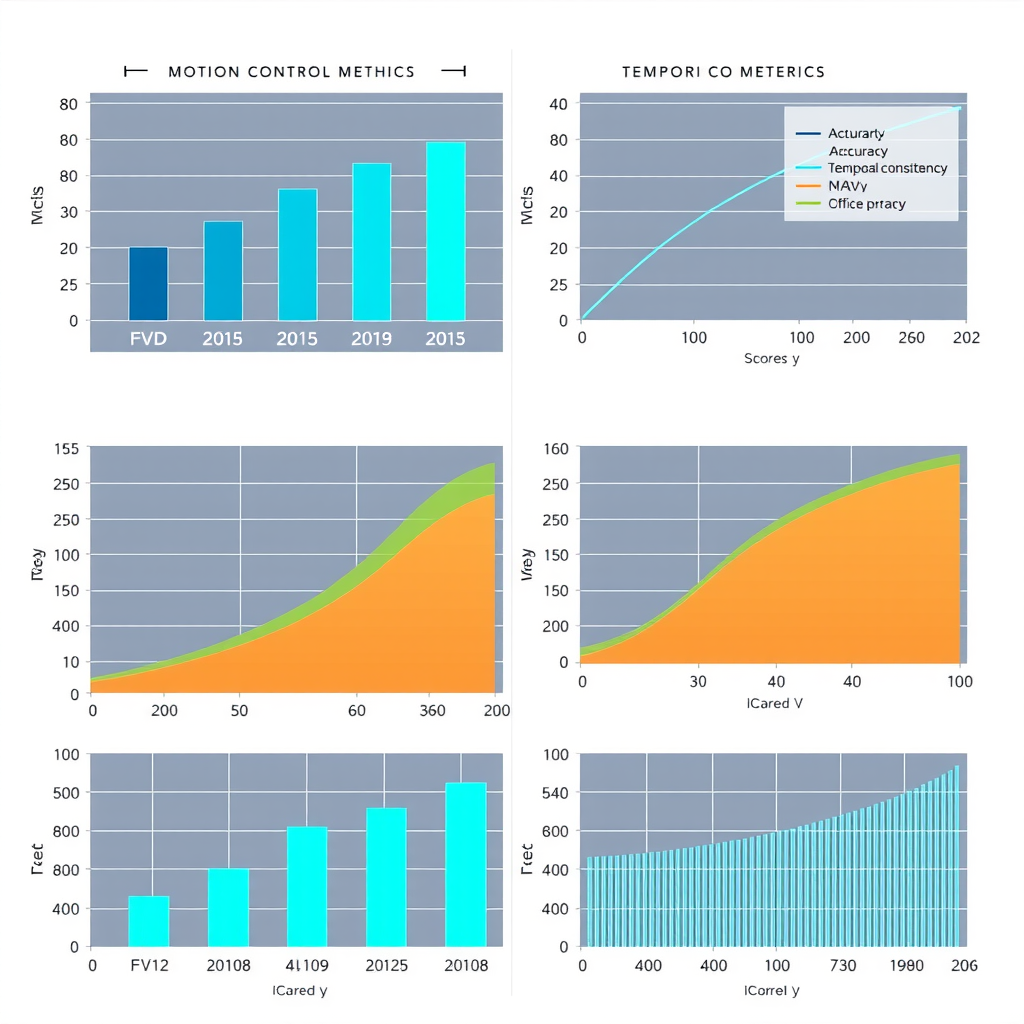

Benchmark Results and Comparative Analysis

| Method | FVD (lower is better) | Motion Accuracy (higher is better) | Temporal Consistency (higher is better) | User Control |
|---|---|---|---|---|
| Optical Flow | 127.3 | 0.94 | 0.91 | High |
| Pose-Based | 142.8 | 0.89 | 0.88 | Medium |
| Trajectory-Driven | 156.4 | 0.82 | 0.85 | High |
| Hybrid Approach | 118.6 | 0.96 | 0.93 | Very High |

Recent academic benchmarks reveal important trade-offs between different motion control approaches. The Fréchet Video Distance (FVD) metric, which compares feature statistics of generated videos with those of real videos and therefore reflects both perceptual quality and diversity (lower is better), shows optical flow conditioning achieving the best score among single-method approaches. However, its computational overhead and the effort of specifying dense flow limit its practical applicability in many scenarios.
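For reference, FVD is the Fréchet distance between Gaussians fitted to embeddings of real and generated videos: ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). The sketch below computes that distance for arbitrary feature arrays. In practice the features come from a pretrained video network (commonly an I3D model), which is not included here, and reported values also depend on the number of samples and the clip length, so scores from different papers are not always directly comparable.

```python
import numpy as np

def _sqrtm_psd(m):
    """Matrix square root of a symmetric positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh(m)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two (N, D) feature sets.

    Uses Tr((S_a S_b)^(1/2)) = Tr((S_a^(1/2) S_b S_a^(1/2))^(1/2)), so only
    square roots of symmetric PSD matrices are needed.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    root_a = _sqrtm_psd(cov_a)
    cross = _sqrtm_psd(root_a @ cov_b @ root_a)
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * np.trace(cross))

rng = np.random.default_rng(0)
real = rng.normal(size=(500, 8))           # stand-in for features of real clips
fake = rng.normal(loc=0.3, size=(500, 8))  # stand-in for features of generated clips
print(frechet_distance(real, real))  # approximately 0
print(frechet_distance(real, fake))  # larger, mostly from the mean shift
```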

Motion accuracy metrics, which quantify how precisely generated videos match specified motion patterns, demonstrate optical flow's superiority in dense control scenarios. Pose-based methods show strong performance for human subjects but struggle with general object motion. Trajectory-driven approaches offer a favorable balance between control precision and ease of use, though they sacrifice some accuracy compared to dense flow conditioning.
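The text does not define its motion accuracy score (the 0 to 1 range suggests a normalized measure), so the following only shows one widely used way to compare motion: the average end-point error (EPE) between a target flow field and flow estimated from the generated video, for example with an off-the-shelf flow estimator. It is not claimed to be the metric behind the table above.

```python
import numpy as np

def average_epe(pred_flow, target_flow, mask=None):
    """Average end-point error: mean Euclidean distance between flow vectors.

    Both flows are (..., 2) arrays of (dx, dy) displacements. An optional
    boolean mask restricts the average to constrained pixels (for example,
    only sparse trajectory points). Lower is better; 0 means an exact match.
    """
    err = np.linalg.norm(pred_flow - target_flow, axis=-1)
    if mask is not None:
        err = err[mask]
    return float(err.mean())

target = np.zeros((8, 8, 2))
pred = target + np.array([3.0, 4.0])  # every vector off by (3, 4)
print(average_epe(pred, target))      # 5.0
```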

Quality-Control Trade-offs

A critical finding from recent research involves the inverse relationship between control precision and output quality in certain scenarios. Methods that provide extremely fine-grained control, such as dense optical flow, can sometimes constrain the generative model too heavily, reducing its ability to produce natural-looking results. This suggests that optimal motion control may require balancing explicit guidance with model freedom.
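One common knob for trading guidance strength against model freedom is the guidance scale in classifier-free guidance. The sketch below assumes a two-scale variant (one possible formulation, not necessarily what the studies above used) in which the denoiser is queried three times per step: unconditionally, with the text prompt, and with text plus the motion signal. Separate scales let the motion constraint be loosened without weakening prompt adherence.

```python
import numpy as np

def guided_noise(eps_uncond, eps_text, eps_text_motion, text_scale, motion_scale):
    """Two-scale classifier-free guidance (one possible formulation).

    text_scale pushes the prediction toward the prompt; motion_scale separately
    controls how strongly the motion signal (flow, pose, or trajectory) is
    enforced on top of it. motion_scale = 0 ignores the motion condition.
    """
    return (eps_uncond
            + text_scale * (eps_text - eps_uncond)
            + motion_scale * (eps_text_motion - eps_text))

rng = np.random.default_rng(0)
eps_u, eps_t, eps_tm = (rng.normal(size=(4, 32, 32)) for _ in range(3))
base = guided_noise(eps_u, eps_t, eps_tm, text_scale=7.5, motion_scale=0.0)
for motion_scale in (0.5, 1.0, 2.0):
    eps = guided_noise(eps_u, eps_t, eps_tm, text_scale=7.5, motion_scale=motion_scale)
    # The pull toward the motion-conditioned prediction grows linearly with the scale.
    print(motion_scale, float(np.linalg.norm(eps - base)))
```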

Temporal consistency metrics reveal that all three approaches maintain reasonable frame-to-frame coherence, though optical flow shows a slight advantage. The consistency scores indicate that modern video diffusion models have substantially reduced the temporal flickering that plagued earlier generation systems. However, maintaining consistency over very long sequences (more than 100 frames) remains challenging for all methods.
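As an illustration, one simple temporal consistency proxy (assumed here; the table does not specify its metric) is the mean cosine similarity between embeddings of consecutive frames, where the embeddings would come from a pretrained image encoder that is not included. A nearly static video also scores close to 1, so proxies like this are usually read alongside motion metrics.

```python
import numpy as np

def temporal_consistency(frame_embeddings):
    """Mean cosine similarity between embeddings of consecutive frames.

    frame_embeddings: (T, D) array, one vector per frame. Values near 1 mean
    adjacent frames look alike; flicker lowers the score.
    """
    e = frame_embeddings / np.linalg.norm(frame_embeddings, axis=1, keepdims=True)
    return float(np.sum(e[:-1] * e[1:], axis=1).mean())

rng = np.random.default_rng(0)
base = rng.normal(size=64)
steady_clip = np.stack([base + 0.05 * rng.normal(size=64) for _ in range(16)])
flicker_clip = np.stack([rng.normal(size=64) for _ in range(16)])
print(temporal_consistency(steady_clip) > temporal_consistency(flicker_clip))  # True
```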

Hybrid Approaches and Future Directions

Combining Multiple Control Modalities

Recent research has explored hybrid systems that combine multiple motion control approaches to leverage their complementary strengths. For example, using pose-based guidance for human subjects while applying trajectory control for objects and camera movement provides both semantic understanding and flexible control. Such multi-modal systems can outperform single-method approaches: in the benchmark table above, the hybrid approach posts the lowest FVD and the highest motion accuracy and temporal consistency.

The integration of different control modalities requires careful architectural design to prevent conflicting guidance signals. Successful implementations use hierarchical conditioning schemes where different control types operate at different levels of the generation process. Pose information might guide high-level structure while optical flow refines fine details, creating a coherent control hierarchy.
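One way to realize such a hierarchy, sketched below purely as a hypothetical, is to schedule the two signals across denoising steps rather than across network layers: pose guidance dominates the early, high-noise steps where coarse layout is decided, and flow guidance takes over in the late steps that refine detail. The sigmoid schedule, its crossover point, and the residual-mixing function are illustrative assumptions.

```python
import numpy as np

def conditioning_weights(t, crossover=0.5, sharpness=10.0):
    """Hypothetical schedule blending two control signals over denoising.

    t is the normalized diffusion time: 1.0 = pure noise (start), 0.0 = clean.
    Returns (pose_weight, flow_weight), which sum to 1: pose dominates at
    large t (coarse structure), flow dominates at small t (fine detail).
    """
    pose_w = 1.0 / (1.0 + np.exp(-sharpness * (t - crossover)))
    return pose_w, 1.0 - pose_w

def combined_residual(pose_residual, flow_residual, t):
    """Mix per-branch feature residuals (e.g. from two adapter networks)."""
    pose_w, flow_w = conditioning_weights(t)
    return pose_w * pose_residual + flow_w * flow_residual

for t in (1.0, 0.75, 0.5, 0.25, 0.0):
    pw, fw = conditioning_weights(t)
    print(f"t={t:.2f}  pose={pw:.3f}  flow={fw:.3f}")
```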

"The future of motion control in video generation lies not in choosing between approaches, but in intelligently combining them to match the specific requirements of each application scenario."

Emerging Research Directions

Key Areas of Active Investigation

- Physics-Informed Generation: Incorporating physical simulation and dynamics models to ensure generated motions obey natural laws, improving realism for scientific and educational applications.

- Language-Driven Motion Control: Developing systems that accept natural language descriptions of desired motions, making video generation accessible to broader audiences without technical expertise.

- Interactive Refinement: Creating real-time feedback loops where users can iteratively adjust motion parameters and immediately see results, enabling more intuitive creative workflows.

- Long-Horizon Consistency: Addressing the challenge of maintaining motion coherence and control precision over extended video sequences spanning hundreds or thousands of frames.

- Multi-Object Coordination: Developing methods for specifying and controlling interactions between multiple moving objects while maintaining physical plausibility and avoiding collisions.

Physics-informed approaches represent a particularly promising direction for educational and scientific applications. By incorporating domain knowledge about physical laws, these systems can generate videos that not only look realistic but also accurately represent real-world phenomena. This capability is invaluable for creating educational content that demonstrates physical principles or scientific processes.

Practical Considerations for Implementation

Computational Requirements

The computational demands of different motion control methods vary significantly and must be considered when selecting an approach for specific applications. Optical flow conditioning requires substantial GPU memory and processing power, particularly for high-resolution videos. A typical implementation might require 24GB of VRAM for generating 512×512 videos at 24 fps, with generation times of several minutes per second of output.
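Some back-of-envelope arithmetic shows where memory goes for a 2-second, 512×512, 24 fps clip. The sketch assumes (none of this is stated in the text) a Stable Diffusion-style VAE with 8× spatial downsampling and 4 latent channels, one latent per frame, fp16 activations, fp32 flow, and attention scores held in memory in full; memory-efficient attention kernels avoid that last cost.

```python
# Back-of-envelope sizes for a 2-second, 512x512, 24 fps clip (assumptions above).
MiB = 1024 ** 2

height = width = 512
fps, seconds = 24, 2
frames = fps * seconds                 # 48 frames
down, latent_channels = 8, 4           # assumed SD-style VAE settings
bytes_fp16, bytes_fp32 = 2, 4

lat_h, lat_w = height // down, width // down  # 64 x 64 latent grid
tokens_per_frame = lat_h * lat_w              # 4096 spatial tokens per frame

latent_bytes = frames * tokens_per_frame * latent_channels * bytes_fp16
spatial_attn_bytes = frames * tokens_per_frame ** 2 * bytes_fp16   # per head, per layer
temporal_attn_bytes = tokens_per_frame * frames ** 2 * bytes_fp16  # per head, per layer
flow_bytes = (frames - 1) * height * width * 2 * bytes_fp32        # (dx, dy) per frame pair

for name, size in [
    ("video latents", latent_bytes),
    ("spatial attention scores", spatial_attn_bytes),
    ("temporal attention scores", temporal_attn_bytes),
    ("dense flow conditioning", flow_bytes),
]:
    print(f"{name:>26}: {size / MiB:8.1f} MiB")
```

Under these assumptions, the quadratic spatial-attention term (1536 MiB per head per layer) dwarfs both the latents and the raw flow tensor (94 MiB), which suggests that much of the extra cost attributed to flow conditioning would come from estimating the flow and running its encoder branch rather than from storing it.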

Pose-based guidance offers more favorable computational characteristics, with lower memory requirements and faster generation times. The compact representation of skeletal poses reduces the conditioning overhead, allowing for more efficient processing. Trajectory-driven methods fall between these extremes, with their conditioning overhead scaling primarily with the number of control points rather than with video resolution.

Training Data Requirements

Each motion control approach has distinct training data requirements that impact their practical deployment. Optical flow methods benefit from large-scale video datasets with diverse motion patterns, requiring millions of video clips for robust performance. Pose-based systems need datasets with accurate pose annotations, which are more expensive to collect but require smaller overall dataset sizes.

Trajectory-driven approaches can be trained with less specialized data, learning motion priors from general video collections. However, they may require additional synthetic data generation to cover edge cases and unusual motion patterns. The choice of training strategy significantly impacts the generalization capabilities and robustness of the resulting system.

Applications in Education and Scientific Research

Educational Content Creation

Motion-controlled video generation has transformative potential for educational content creation. Educators can generate custom visualizations of complex processes, from molecular dynamics to astronomical phenomena, with precise control over motion parameters. This capability enables the creation of explanatory videos that would be impossible or prohibitively expensive to capture with traditional filming methods.

The ability to systematically vary motion parameters while keeping other factors constant provides powerful pedagogical tools. Students can observe how changing specific variables affects system behavior, developing intuitive understanding through visual exploration. Pose-based control is particularly valuable for demonstrating proper technique in physical activities, from sports to surgical procedures.

Scientific Visualization and Analysis

In scientific research, controlled video generation enables new forms of data visualization and hypothesis testing. Researchers can generate synthetic datasets with known ground truth for validating analysis algorithms, or create visualizations of simulation results that communicate complex findings to broader audiences. The precise motion control allows scientists to isolate specific phenomena and study their effects systematically.

Trajectory-driven generation can be valuable for visualizing particle tracking data, fluid dynamics simulations, and other scientific processes involving motion. The ability to specify exact paths while letting the model fill in realistic visual details bridges the gap between abstract data and intuitive visual understanding, which can accelerate scientific communication and facilitate interdisciplinary collaboration.

Conclusion and Outlook

The landscape of motion control in AI-generated videos encompasses diverse approaches, each with distinct advantages and limitations. Optical flow conditioning provides unmatched precision for dense motion control but requires significant computational resources and expertise. Pose-based guidance offers intuitive semantic control for human subjects, making it accessible to broader audiences. Trajectory-driven generation strikes a balance between control precision and ease of use, enabling creative workflows with minimal technical overhead.

Benchmark results demonstrate that no single approach dominates across all metrics and use cases. The optimal choice depends on specific application requirements, available computational resources, and user expertise. Hybrid systems that intelligently combine multiple control modalities show the most promise for achieving both high-quality results and flexible control.

Looking forward, the field is moving toward more integrated systems that seamlessly blend different control paradigms while incorporating physical constraints and semantic understanding. The development of language-driven interfaces and interactive refinement tools will further democratize access to these powerful technologies. As Stable Diffusion and other video generation models continue to advance, motion control methods will play an increasingly central role in enabling precise, creative, and scientifically rigorous video synthesis for educational and research applications.

The ongoing research in this domain promises to unlock new possibilities for content creation, scientific visualization, and educational innovation. By understanding the strengths and limitations of current approaches, researchers and practitioners can make informed decisions about which methods best serve their specific needs, while contributing to the continued evolution of this rapidly advancing field.