Understanding Latent Space in Video Diffusion Models

Latent space manipulation represents one of the most powerful techniques in modern video generation research. Unlike traditional pixel-space operations, working within the latent representation of video diffusion models allows researchers to perform semantic edits, interpolations, and transformations that maintain temporal coherence while achieving remarkable control over generated content.

The latent space of a video diffusion model serves as a compressed, semantically meaningful representation of visual information. Each point in this high-dimensional space corresponds to a specific configuration of visual features, and the geometry of this space encodes relationships between different visual concepts. Understanding how to navigate and manipulate this space is fundamental to advancing video generation capabilities.

Recent latent diffusion architectures, the family to which Stable Diffusion belongs, have shown that latent representations can capture temporal dynamics as well as spatial features, which makes them well suited to video generation. The key challenge lies in developing methods that traverse this space while maintaining both visual quality and temporal consistency across frames.

This guide covers the theoretical foundations, practical techniques, and experimental methodologies for manipulating latent representations in video diffusion models. We examine interpolation strategies that enable smooth transitions between visual states, semantic editing approaches that allow targeted modification of specific attributes, and conditioning methods that provide fine-grained control over the generation process.

Interpolation Strategies for Temporal Coherence

Linear Interpolation in Latent Space

Linear interpolation represents the most straightforward approach to traversing latent space between two points. Given two latent vectors z₁ and z₂, the interpolated latent vector at parameter t ∈ [0,1] can be computed as z(t) = (1-t)z₁ + tz₂. While conceptually simple, this approach often produces semantically meaningful transitions in well-trained video diffusion models.
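The formula above translates almost directly into code. The following is a minimal NumPy sketch, not tied to any particular model; the latent shape (frames, channels, height, width) and the helper names `lerp` and `lerp_path` are assumptions made for illustration.

```python
import numpy as np

def lerp(z1: np.ndarray, z2: np.ndarray, t: float) -> np.ndarray:
    """Linear interpolation z(t) = (1 - t) * z1 + t * z2 for t in [0, 1]."""
    return (1.0 - t) * z1 + t * z2

def lerp_path(z1: np.ndarray, z2: np.ndarray, num_steps: int) -> np.ndarray:
    """Stack num_steps evenly spaced interpolants, endpoints included."""
    ts = np.linspace(0.0, 1.0, num_steps)
    return np.stack([lerp(z1, z2, t) for t in ts])

# Hypothetical video latents: (frames, channels, height, width).
rng = np.random.default_rng(0)
z1 = rng.standard_normal((8, 4, 16, 16))
z2 = rng.standard_normal((8, 4, 16, 16))

path = lerp_path(z1, z2, num_steps=5)  # one latent video per interpolation step
```

Each entry of `path` would then be decoded by the model to obtain one intermediate video.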

The effectiveness of linear interpolation depends heavily on the structure of the learned latent space. In models trained with appropriate regularization, the latent space tends to be relatively smooth, so linear paths between points correspond to gradual, perceptually meaningful changes in the generated video. However, linear interpolation can traverse regions of low probability density. For example, when latents follow a high-dimensional Gaussian prior, the midpoint of two independent samples has a markedly smaller norm than either endpoint, which can result in artifacts or unrealistic intermediate frames.

Experimental results demonstrate that linear interpolation works particularly well for interpolating between similar visual concepts or slight variations of the same scene. For instance, transitioning between different camera angles of the same object or gradually changing lighting conditions can be achieved effectively through linear interpolation. The key is ensuring that the endpoints of the interpolation represent semantically related concepts within the model's learned representation.

Spherical Linear Interpolation (SLERP)

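Spherical linear interpolation offers an alternative approach that accounts for the geometric properties of high-dimensional latent spaces. Unlike standard linear interpolation, SLERP follows an arc rather than a chord: when z₁ and z₂ have the same norm, every interpolated vector keeps that norm, which can be particularly important when working with normalized latent representations. The SLERP formula computes the interpolated vector as z(t) = (sin((1-t)θ)/sin(θ))z₁ + (sin(tθ)/sin(θ))z₂, where θ = arccos(z₁·z₂ / (‖z₁‖‖z₂‖)) is the angle between z₁ and z₂. As θ approaches 0 the weights reduce to those of linear interpolation, and implementations usually fall back to the linear formula there to avoid dividing by a vanishing sin(θ).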

This approach is especially useful when latents concentrate near a hypersphere, as samples from a high-dimensional Gaussian prior do; this situation is common in variational autoencoders and in the noise latents of diffusion models. By following the great-circle (geodesic) path on the hypersphere, SLERP can produce smoother, more natural transitions than linear interpolation, particularly when interpolating between distant points in latent space.
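A minimal NumPy sketch of SLERP follows, together with a small check of the norm behavior. It treats each latent as one flattened vector, and the fallback threshold `eps` is an assumption chosen for illustration rather than a value taken from any specific library.

```python
import numpy as np

def slerp(z1: np.ndarray, z2: np.ndarray, t: float, eps: float = 1e-7) -> np.ndarray:
    """Spherical linear interpolation between two latents of matching shape."""
    a, b = z1.ravel(), z2.ravel()
    cos_theta = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    theta = np.arccos(np.clip(cos_theta, -1.0, 1.0))  # angle between z1 and z2
    sin_theta = np.sin(theta)
    if sin_theta < eps:
        # Nearly parallel or antiparallel: the weights are ill-conditioned,
        # so fall back to ordinary linear interpolation.
        return (1.0 - t) * z1 + t * z2
    w1 = np.sin((1.0 - t) * theta) / sin_theta
    w2 = np.sin(t * theta) / sin_theta
    return w1 * z1 + w2 * z2

# Two random latents rescaled to the same norm (illustrative data only).
rng = np.random.default_rng(0)
z1 = rng.standard_normal(512)
z2 = rng.standard_normal(512)
z2 *= np.linalg.norm(z1) / np.linalg.norm(z2)

mid_slerp = slerp(z1, z2, 0.5)
mid_lerp = 0.5 * (z1 + z2)
norm_ratio_slerp = np.linalg.norm(mid_slerp) / np.linalg.norm(z1)  # stays at 1
norm_ratio_lerp = np.linalg.norm(mid_lerp) / np.linalg.norm(z1)    # shrinks below 1
```

The constant norm holds only because both endpoints were given equal norms; with unequal norms the interpolant's magnitude varies along the path.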

Key Insight: The choice between linear and spherical interpolation should be guided by the geometric properties of your specific model's latent space. Empirical testing with your architecture is essential to determine which approach yields superior results for your particular use case.

Semantic Editing Methods and Attribute Manipulation

Direction-Based Editing in Latent Space

Semantic editing through direction-based manipulation leverages the observation that specific directions in latent space often correspond to meaningful semantic attributes. By identifying these directions, researchers can perform targeted edits by moving along them. For example, a direction vector d might correspond to "increasing brightness" or "adding motion blur," allowing edits of the form z_edited = z_original + αd, where α controls the magnitude of the edit.
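The edit z_edited = z_original + αd can be sketched as below. Two choices here are assumptions rather than part of the formula: the direction is normalized to unit length so that α has a consistent scale, and the same direction is added to every frame's latent, which is one simple way to keep the edit uniform over time. The shapes and the random direction are placeholders.

```python
import numpy as np

def apply_direction(z: np.ndarray, direction: np.ndarray, alpha: float) -> np.ndarray:
    """Return z + alpha * d_hat, where d_hat is the unit-normalized direction."""
    d_hat = direction / np.linalg.norm(direction)
    return z + alpha * d_hat  # broadcasts over the leading frame axis

# Hypothetical per-frame latents (frames, dim) and a stand-in attribute direction.
rng = np.random.default_rng(0)
z = rng.standard_normal((8, 64))
d = rng.standard_normal(64)

# Sweeping alpha gives a family of edits from "less" to "more" of the attribute.
sweep = [apply_direction(z, d, alpha) for alpha in (-2.0, 0.0, 2.0)]
```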

Discovering these semantic directions typically involves one of several approaches. Supervised methods use labeled data to identify directions that separate examples with different attribute values. Unsupervised techniques employ dimensionality reduction or clustering to discover meaningful axes of variation. More recent approaches use contrastive learning to identify directions that maximize semantic distinctiveness while maintaining visual quality.
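As one concrete instance of the supervised route, a direction can be estimated as the difference between the mean latents of examples with and without an attribute. This is only one simple option among those listed above, and the synthetic data below (a known direction plus Gaussian noise) is an assumption used to check that the estimate recovers it.

```python
import numpy as np

def mean_difference_direction(latents: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Unit vector pointing from the negative-class mean to the positive-class mean.

    latents: (n, dim) array; labels: (n,) boolean array.
    """
    pos_mean = latents[labels].mean(axis=0)
    neg_mean = latents[~labels].mean(axis=0)
    d = pos_mean - neg_mean
    return d / np.linalg.norm(d)

# Synthetic check: the attribute shifts latents along the first axis.
rng = np.random.default_rng(0)
n, dim = 2000, 32
true_direction = np.zeros(dim)
true_direction[0] = 1.0
labels = rng.random(n) < 0.5
latents = rng.standard_normal((n, dim)) + 3.0 * labels[:, None] * true_direction

estimated = mean_difference_direction(latents, labels)
cosine = float(estimated @ true_direction)  # close to 1 when recovery succeeds
```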

The effectiveness of direction-based editing depends critically on the disentanglement properties of the latent space. In highly entangled spaces, moving along a single direction may affect multiple attributes simultaneously, making precise control difficult. Advanced architectures incorporate explicit disentanglement objectives during training to improve the independence of different semantic directions.

Conditional Latent Manipulation

Conditional manipulation techniques extend basic semantic editing by incorporating additional conditioning information to guide the editing process. This approach allows for more nuanced control by specifying not just the direction of change but also contextual constraints that should be maintained. For instance, when editing the pose of a subject in a video, conditional manipulation can ensure that other attributes like appearance and background remain consistent.

Implementation of conditional manipulation typically involves training auxiliary networks that predict appropriate latent modifications given both the current latent state and desired conditioning information. These networks learn to map from the space of edit specifications to the space of latent perturbations, enabling intuitive control interfaces for complex editing operations.

Recent research has demonstrated that conditional manipulation can be particularly powerful when combined with attention mechanisms that identify which regions of the latent representation should be modified to achieve the desired edit. This spatial awareness allows for localized edits that affect only specific objects or regions within the generated video, greatly expanding the range of possible editing operations.
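The idea of a localized edit can be illustrated with a spatial mask that gates where a latent direction is applied. In the sketch below the mask is specified by hand; in the systems described above it would come from an attention mechanism, which is not modeled here. The latent layout (frames, channels, height, width) and all values are assumptions.

```python
import numpy as np

def masked_edit(z: np.ndarray, direction: np.ndarray, mask: np.ndarray, alpha: float) -> np.ndarray:
    """Add alpha * direction only where mask is nonzero.

    z: (frames, channels, h, w); direction: (channels,); mask: (h, w) with values in [0, 1].
    """
    delta = alpha * direction[None, :, None, None] * mask[None, None, :, :]
    return z + delta

rng = np.random.default_rng(0)
z = rng.standard_normal((2, 3, 4, 4))
direction = np.array([1.0, 0.0, -1.0])
mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1.0  # edit only the central 2x2 region of every frame

edited = masked_edit(z, direction, mask, alpha=2.0)
```

Using the same mask for every frame keeps the edited region fixed; a moving object would need a per-frame mask of shape (frames, h, w).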

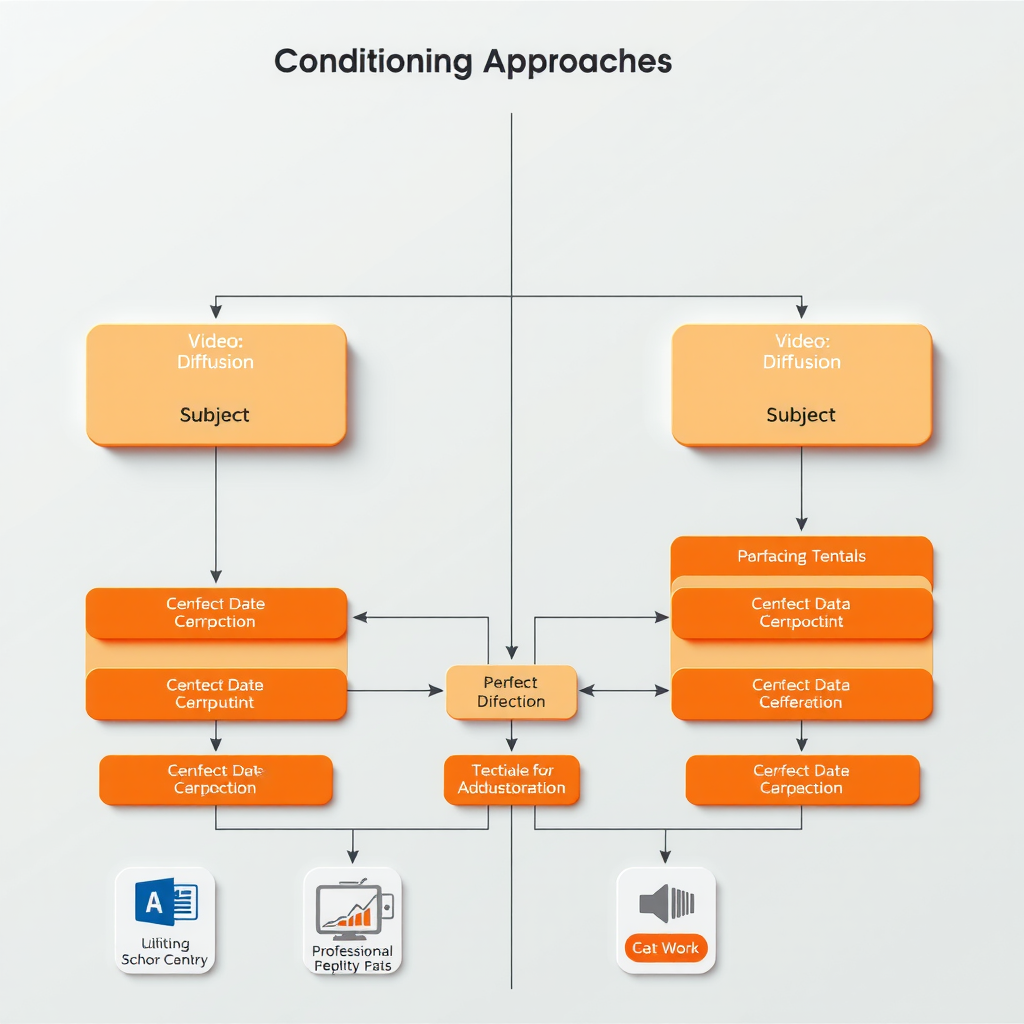

Conditioning Approaches for Fine-Grained Control

Text-Guided Latent Manipulation

Text-guided manipulation represents one of the most intuitive interfaces for controlling video generation through latent space manipulation. By leveraging pre-trained text encoders, researchers can map natural language descriptions to directions in latent space, enabling edits specified through simple text prompts. This approach builds on the success of text-to-image models but extends the concept to temporal domains.

The key challenge in text-guided video manipulation lies in maintaining temporal consistency while applying text-specified edits. Unlike single-image editing, video manipulation must ensure that changes propagate smoothly across frames and that temporal relationships remain coherent. Advanced techniques employ temporal attention mechanisms and frame-to-frame consistency losses to address these challenges.
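A frame-to-frame consistency term can be as simple as the mean squared difference between consecutive frames, sketched below. This naive form is an assumption chosen for brevity: it also penalizes genuine motion, so it is better read as a diagnostic for flicker than as a complete training loss.

```python
import numpy as np

def frame_consistency_loss(frames: np.ndarray) -> float:
    """Mean squared difference between consecutive frames; frames has shape (T, ...)."""
    diffs = frames[1:] - frames[:-1]
    return float(np.mean(diffs ** 2))

rng = np.random.default_rng(0)
first = rng.standard_normal((1, 4, 8, 8))

static = np.tile(first, (6, 1, 1, 1))                        # identical frames
drift = static + 0.01 * np.arange(6)[:, None, None, None]    # slow, uniform change
noisy = rng.standard_normal((6, 4, 8, 8))                    # unrelated frames (flicker)

loss_static = frame_consistency_loss(static)
loss_drift = frame_consistency_loss(drift)
loss_noisy = frame_consistency_loss(noisy)
```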

Experimental implementations have shown that text-guided manipulation works particularly well for high-level semantic changes such as style transfer, mood adjustment, or object replacement. More fine-grained edits may require hybrid approaches that combine text guidance with other conditioning modalities to achieve precise control.

Multi-Modal Conditioning Strategies

Multi-modal conditioning extends beyond text to incorporate diverse sources of control information including sketches, depth maps, segmentation masks, and reference images. Each modality provides different types of constraints on the generation process, and their combination enables unprecedented levels of control over the output video.

Implementing multi-modal conditioning requires careful design of fusion mechanisms that can effectively integrate information from different sources. Common approaches include cross-attention layers that allow the model to selectively attend to different conditioning inputs, adaptive normalization techniques that modulate feature statistics based on conditioning information, and hierarchical conditioning schemes that apply different modalities at different stages of the generation process.
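Of the fusion mechanisms listed, adaptive normalization is the easiest to show in a few lines: features are normalized per channel, then rescaled and shifted by values predicted from the conditioning vector. The sketch below uses random matrices in place of learned projection weights, and the shapes and parameter names are assumptions for illustration.

```python
import numpy as np

def adaptive_norm(features, cond, w_scale, w_shift, eps=1e-5):
    """Normalize each channel, then modulate it with conditioning-derived scale and shift.

    features: (channels, positions); cond: (cond_dim,);
    w_scale, w_shift: (channels, cond_dim) projections (learned in a real model).
    """
    mean = features.mean(axis=1, keepdims=True)
    std = features.std(axis=1, keepdims=True)
    normalized = (features - mean) / (std + eps)
    scale = 1.0 + w_scale @ cond   # zero weights leave the normalized features unscaled
    shift = w_shift @ cond
    return scale[:, None] * normalized + shift[:, None]

rng = np.random.default_rng(0)
features = 3.0 * rng.standard_normal((8, 100)) + 5.0
cond = rng.standard_normal(16)     # e.g. an embedding of text or a reference image

zeros = np.zeros((8, 16))
plain = adaptive_norm(features, cond, zeros, zeros)

w_scale = 0.1 * rng.standard_normal((8, 16))
w_shift = 0.1 * rng.standard_normal((8, 16))
modulated = adaptive_norm(features, cond, w_scale, w_shift)
```

After modulation, each channel's mean equals the predicted shift, which is how the conditioning signal changes the feature statistics.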

The power of multi-modal conditioning lies in its flexibility. Researchers can specify coarse structure through sketches, fine-tune appearance through reference images, and add semantic guidance through text, all within a single unified framework. This composability makes multi-modal approaches particularly valuable for practical applications where users need intuitive yet powerful control over video generation.

Relationship Between Latent Dimensions and Visual Attributes

Analyzing Latent Space Structure

Understanding the relationship between specific latent dimensions and visual attributes is crucial for effective manipulation. Research has shown that different dimensions of the latent representation often encode different types of information, though the degree of specialization varies significantly across architectures and training procedures.

Systematic analysis of latent space structure typically involves perturbation studies where individual dimensions or groups of dimensions are varied while observing the resulting changes in generated content. These studies reveal that some dimensions may control global properties like overall brightness or color temperature, while others affect more localized features such as object positions or fine-grained textures.
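A perturbation study of this kind can be sketched as a loop that shifts one latent dimension at a time and records how measured attributes change. The decoder below is a toy stand-in (dimension 0 sets brightness, dimension 1 scales a horizontal gradient), and `toy_decode`, the attribute functions, and the step size `delta` are all assumptions made so the example runs without a real model.

```python
import numpy as np

def perturbation_sensitivity(decode, attribute_fns, z, delta=0.5):
    """Return S where S[i, j] is the change in attribute j per unit shift of latent dim i."""
    base = decode(z)
    base_attrs = np.array([fn(base) for fn in attribute_fns])
    z_flat = z.ravel()
    sens = np.zeros((z_flat.size, len(attribute_fns)))
    for i in range(z_flat.size):
        z_pert = z_flat.copy()
        z_pert[i] += delta
        out = decode(z_pert.reshape(z.shape))
        sens[i] = (np.array([fn(out) for fn in attribute_fns]) - base_attrs) / delta
    return sens

def toy_decode(z):
    """Toy decoder: 4 identical 16x16 frames built from two of the latent dims."""
    ramp = np.linspace(-1.0, 1.0, 16)                     # horizontal gradient
    frame = z[0] + z[1] * ramp[None, :] * np.ones((16, 1))
    return np.repeat(frame[None], 4, axis=0)

attribute_fns = [np.mean, np.std]                         # brightness, contrast
z = np.array([0.2, 1.0, 0.0, 0.0])
sens = perturbation_sensitivity(toy_decode, attribute_fns, z)
# Row 0 affects only brightness, row 1 only contrast, rows 2 and 3 nothing.
```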

Advanced analysis techniques employ information-theoretic measures to quantify the mutual information between latent dimensions and specific visual attributes. These measures help identify which dimensions are most informative for particular attributes, guiding the development of more efficient manipulation strategies that focus on the most relevant dimensions.

Disentanglement and Independence

The degree of disentanglement in latent space—the extent to which different dimensions independently control different attributes—significantly impacts the ease and precision of manipulation. Highly disentangled representations allow for independent control of different visual properties, while entangled representations require more sophisticated manipulation strategies to achieve targeted edits.

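Measuring disentanglement involves evaluating how changes in individual latent dimensions affect multiple visual attributes. Metrics such as the Mutual Information Gap (MIG) and the Separated Attribute Predictability (SAP) score provide quantitative assessments of disentanglement quality. MIG, for example, takes each ground-truth attribute, finds the two latent dimensions that share the most mutual information with it, and reports the gap between them normalized by the attribute's entropy; a large gap means a single dimension carries that attribute. These metrics guide both architecture design and training procedure development aimed at improving disentanglement.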
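The Mutual Information Gap can be sketched with a histogram-based estimate of mutual information. The version below assumes integer-coded ground-truth factors and continuous latents that are discretized into equal-width bins; the bin count and the synthetic data are assumptions, and histogram estimates carry a small positive bias on finite samples.

```python
import numpy as np

def discrete_mutual_info(x: np.ndarray, y: np.ndarray) -> float:
    """Mutual information (nats) between two non-negative integer-coded arrays."""
    joint = np.zeros((x.max() + 1, y.max() + 1))
    np.add.at(joint, (x, y), 1.0)
    joint /= joint.sum()
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (px @ py)[nz])))

def entropy(y: np.ndarray) -> float:
    p = np.bincount(y) / y.size
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def mutual_information_gap(latents: np.ndarray, factors: np.ndarray, bins: int = 20) -> float:
    """latents: (n, d) continuous; factors: (n, k) integer-coded ground-truth attributes."""
    d = latents.shape[1]
    binned = np.stack([
        np.digitize(latents[:, j], np.histogram_bin_edges(latents[:, j], bins)[1:-1])
        for j in range(d)
    ], axis=1)
    gaps = []
    for k in range(factors.shape[1]):
        mi = np.array([discrete_mutual_info(binned[:, j], factors[:, k]) for j in range(d)])
        second, first = np.sort(mi)[-2:]
        gaps.append((first - second) / entropy(factors[:, k]))
    return float(np.mean(gaps))

# Synthetic comparison: one latent per factor versus latents that mix both factors.
rng = np.random.default_rng(0)
n = 5000
factors = rng.integers(0, 5, size=(n, 2))
noise = 0.05 * rng.standard_normal((n, 3))
disentangled = np.column_stack([factors[:, 0], factors[:, 1], np.zeros(n)]) + noise
entangled = np.column_stack([factors[:, 0] + factors[:, 1],
                             factors[:, 0] - factors[:, 1],
                             np.zeros(n)]) + noise

mig_disentangled = mutual_information_gap(disentangled, factors)
mig_entangled = mutual_information_gap(entangled, factors)
```

On this synthetic data the disentangled latents score much higher than the mixed ones, which is the behavior the metric is designed to reward.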

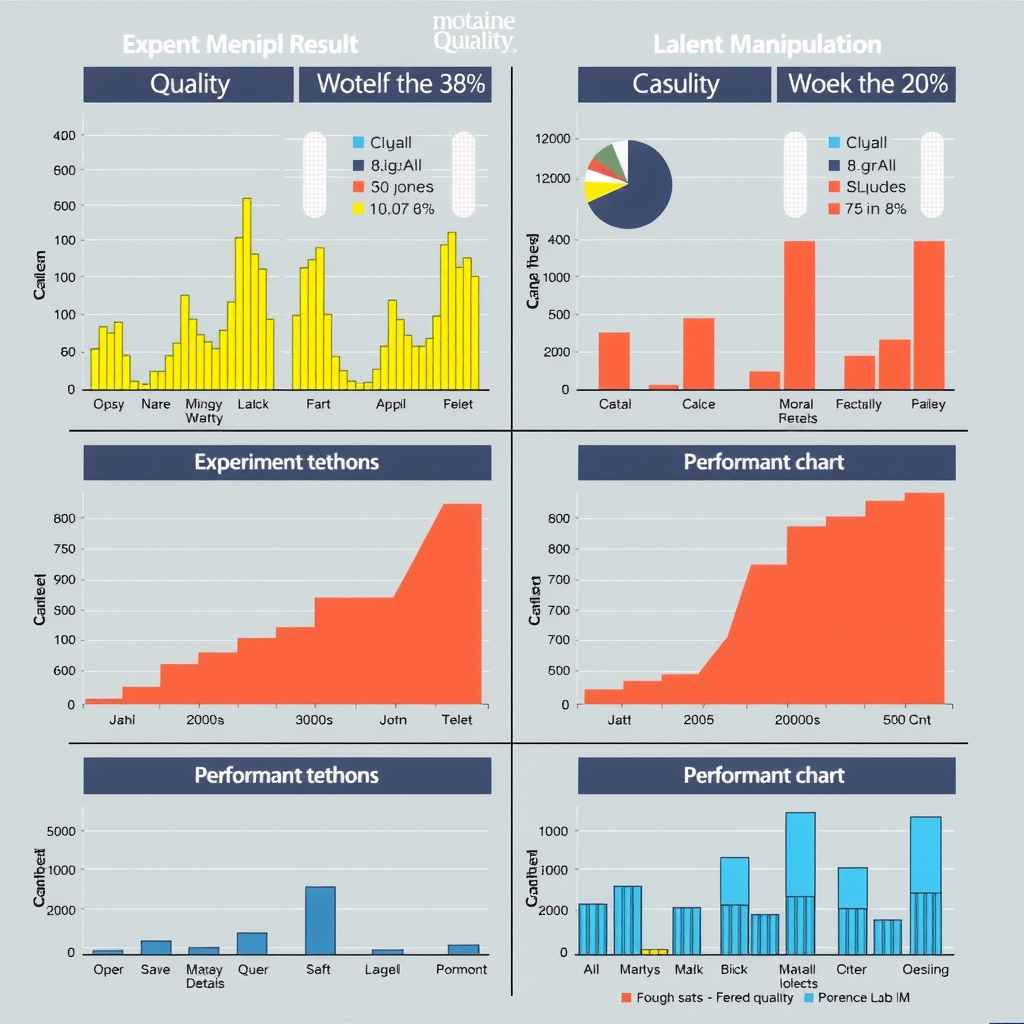

Documented Experiments and Empirical Results

Interpolation Quality Assessment

Extensive experiments comparing different interpolation strategies reveal important insights about their relative strengths and limitations. Quantitative evaluations using metrics such as Fréchet Video Distance (FVD) and temporal consistency scores demonstrate that the optimal interpolation method depends on both the specific model architecture and the nature of the content being interpolated.

For interpolations between similar frames, linear interpolation often achieves results comparable to more sophisticated methods while requiring significantly less computation. However, for longer interpolation paths or between semantically distant concepts, spherical interpolation and learned interpolation methods show clear advantages in maintaining visual quality and temporal coherence.

Perceptual studies with human evaluators complement these quantitative metrics, revealing that perceived quality depends not only on technical measures but also on factors such as motion smoothness and semantic plausibility. These findings emphasize the importance of multi-faceted evaluation when developing and comparing interpolation techniques.

Semantic Editing Effectiveness

Controlled experiments evaluating semantic editing methods demonstrate varying degrees of success across different types of edits. Attribute manipulations that align with the model's training distribution—such as adjusting lighting or camera angles—generally achieve high-quality results with minimal artifacts. More challenging edits that require significant departures from the training distribution may produce less consistent results.

Quantitative analysis of editing precision shows that direction-based methods excel at making consistent, repeatable edits but may struggle with complex, multi-attribute modifications. Conditional manipulation approaches offer greater flexibility but require more careful tuning to achieve desired results. Hybrid methods that combine multiple techniques often provide the best balance of control and quality.

Research Finding: Our experiments indicate that the most effective manipulation strategies adapt their approach based on the specific edit being performed, using simpler methods for straightforward adjustments and more sophisticated techniques for complex transformations.

Implementation Guidelines for Researchers

Practical Considerations for Latent Manipulation

Implementing latent space manipulation in video diffusion models requires careful attention to several practical considerations. First, the choice of latent space representation significantly impacts manipulation capabilities. Models with larger latent dimensions generally offer more flexibility but may require more sophisticated manipulation techniques to achieve precise control.

Computational efficiency represents another critical consideration. Real-time or near-real-time manipulation requires optimized implementations that minimize unnecessary computations. Techniques such as caching intermediate representations, using efficient interpolation algorithms, and leveraging GPU acceleration can significantly improve performance.

Stability and robustness of manipulation operations must be carefully validated. Small perturbations in latent space should produce predictable, smooth changes in output, and the system should gracefully handle edge cases such as extreme parameter values or unusual combinations of edits. Comprehensive testing across diverse scenarios helps identify and address potential failure modes.
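One way to test the "small perturbations produce small changes" requirement is to probe the decoder with tiny random steps and record the largest observed ratio of output change to input change. The sketch below is a rough empirical probe under stated assumptions: the decoders are toy functions, and `eps`, `trials`, and the function name are hypothetical choices.

```python
import numpy as np

def local_sensitivity(decode, z, eps=1e-3, trials=16, seed=0):
    """Largest observed ||decode(z + eps*u) - decode(z)|| / eps over random unit directions u."""
    rng = np.random.default_rng(seed)
    base = decode(z)
    ratios = []
    for _ in range(trials):
        u = rng.standard_normal(z.shape)
        u /= np.linalg.norm(u)
        ratios.append(np.linalg.norm(decode(z + eps * u) - base) / eps)
    return float(max(ratios))

rng = np.random.default_rng(1)
z = rng.standard_normal(64)

# Toy decoders standing in for a real model.
sens_linear = local_sensitivity(lambda v: 2.0 * v, z)   # every step is doubled
sens_tanh = local_sensitivity(np.tanh, z)               # tanh never amplifies a step
```

A ratio that jumps by orders of magnitude between nearby latents, or between edit strengths, is a signal to inspect that region for artifacts.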

Integration with Existing Architectures

Integrating latent manipulation capabilities into existing video diffusion architectures requires thoughtful design decisions. The manipulation interface should be modular and extensible, allowing researchers to easily experiment with different manipulation strategies without requiring extensive modifications to the core model.

Compatibility with standard training procedures is essential for practical adoption. Manipulation techniques should work with models trained using conventional objectives and should not require specialized training procedures unless the benefits clearly justify the additional complexity. When specialized training is necessary, clear documentation and reference implementations facilitate adoption by the research community.

Version control and reproducibility considerations are particularly important for research applications. Detailed logging of manipulation parameters, random seeds, and model versions ensures that results can be reliably reproduced. Standardized evaluation protocols enable fair comparisons between different manipulation approaches and facilitate cumulative progress in the field.
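The logging described here can be kept lightweight. The sketch below, using only the Python standard library, stores each manipulation as a small record and derives a short fingerprint from its canonical JSON form; the field names and example values are hypothetical, not a standard schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ManipulationRecord:
    """Everything needed to repeat one latent manipulation (illustrative fields)."""
    model_version: str
    seed: int
    method: str
    params: dict = field(default_factory=dict)

    def to_json(self) -> str:
        # sort_keys gives a canonical string, so equal records serialize identically.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]

record = ManipulationRecord(
    model_version="example-model-v1",          # placeholder identifier
    seed=1234,
    method="slerp",
    params={"t": 0.5, "num_steps": 16},
)
same = ManipulationRecord("example-model-v1", 1234, "slerp", {"num_steps": 16, "t": 0.5})
other = ManipulationRecord("example-model-v1", 1235, "slerp", {"t": 0.5, "num_steps": 16})

restored = ManipulationRecord(**json.loads(record.to_json()))
```

Writing the JSON line and fingerprint next to each generated video makes it possible to match an output to the exact settings that produced it.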

Future Directions and Open Challenges

Advancing Temporal Consistency

Despite significant progress, maintaining perfect temporal consistency during latent manipulation remains an open challenge. Future research directions include developing more sophisticated temporal models that better capture long-range dependencies, exploring novel architectures that explicitly encode temporal relationships in latent space, and investigating hybrid approaches that combine learned and analytical methods for ensuring consistency.

Emerging techniques such as neural ordinary differential equations (Neural ODEs) show promise for modeling continuous temporal dynamics in latent space. These approaches could enable smoother, more natural interpolations and provide better theoretical foundations for understanding temporal evolution in video diffusion models.

Scaling to Higher Resolutions and Longer Videos

As video generation capabilities advance toward higher resolutions and longer durations, latent manipulation techniques must scale accordingly. This scaling presents both computational and algorithmic challenges. Hierarchical latent representations that capture information at multiple temporal and spatial scales may provide a path forward, enabling efficient manipulation of long, high-resolution videos.

Research into efficient attention mechanisms and sparse manipulation strategies will be crucial for making latent manipulation practical at scale. Techniques that identify and focus computational resources on the most important regions of latent space could dramatically improve efficiency without sacrificing quality.

Improving Controllability and User Interfaces

The ultimate goal of latent manipulation research is to provide intuitive, powerful control over video generation. Future work should focus on developing user interfaces that make sophisticated manipulation techniques accessible to non-experts while still providing the precision required by professional users. This includes exploring novel interaction paradigms, developing intelligent assistance systems that suggest appropriate manipulations, and creating comprehensive toolkits that integrate multiple manipulation approaches.

Machine learning techniques that learn user preferences and adapt manipulation strategies accordingly could significantly improve usability. By observing how users interact with the system and what types of edits they perform, these adaptive systems could provide increasingly personalized and efficient control over video generation.

Conclusion

Latent space manipulation is a powerful paradigm for controlling video diffusion models, giving researchers tools for semantic editing, interpolation, and fine-grained control over generated content. This guide has covered the theoretical foundations, practical techniques, and experimental methodologies that underpin effective latent manipulation.

From basic interpolation strategies to sophisticated multi-modal conditioning approaches, the field has developed a rich toolkit of techniques for navigating and manipulating latent representations. Understanding the relationship between latent dimensions and visual attributes, implementing robust manipulation algorithms, and carefully evaluating results through both quantitative metrics and perceptual studies are all essential components of successful research in this area.

As video generation technology continues to advance, latent manipulation techniques will play an increasingly important role in making these powerful models accessible and controllable. The open challenges and future directions outlined in this guide point toward exciting opportunities for continued innovation, from improving temporal consistency to scaling to higher resolutions and developing more intuitive user interfaces.

For researchers working with video diffusion architectures, mastering latent space manipulation techniques is essential for pushing the boundaries of what's possible in AI-driven video generation. By building on the foundations laid out in this guide and contributing to ongoing research efforts, the community can continue to advance the state of the art and unlock new creative possibilities in video generation technology.